Today, while analyzing some of my websites to work out a good structure for resource parallelization, I started looking at some examples around the web…

Naturally, since I was studying big websites, I started analyzing Mashable.com, and I found something very strange in their parallelization structure.

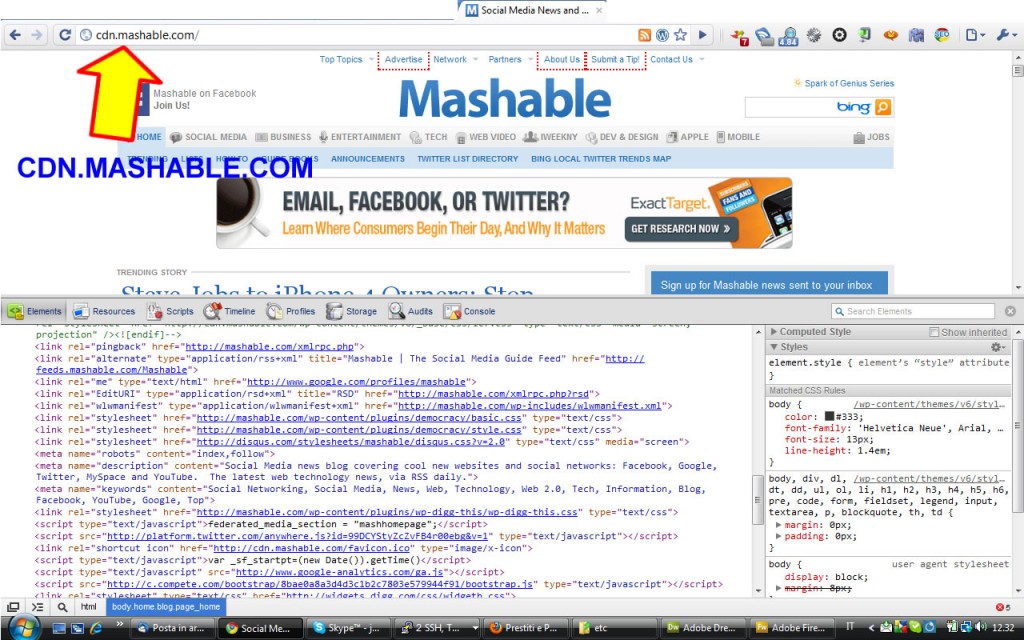

Looking at their code, we can see that they use a subdomain, cdn.mashable.com, to deliver some static resources, and this is good practice…

BUT now try visiting cdn.mashable.com

You will see a complete duplicate of the Mashable website on the subdomain used for parallelization, without any kind of noindex, nofollow meta tag.

I’m very surprised to see a site like that neither preventing indexing nor redirecting the CDN to the “canonical URL”.

For comparison, looking at the website of a great SEO man (Yoast), we can see that his CDN (cdn.yoast.com) correctly redirects to the main page, preventing any kind of duplication.
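To make the check concrete, here is a minimal sketch of how one could look for a robots meta tag in a page's HTML. The markup strings and the `robots_directives` helper are my own illustrations, not anything taken from Mashable's pages.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so normalize to "".
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html):
    """Return the set of robots meta directives present in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page markup, not copied from any real site.
protected = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
unprotected = "<html><head><title>Duplicate page</title></head></html>"

print("noindex" in robots_directives(protected))
print("noindex" in robots_directives(unprotected))
```

A page served from the CDN subdomain with no such tag (and no redirect) gives search engines nothing in the markup telling them to skip it.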
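The redirect approach can be sketched roughly like this. It is only an illustration under my own assumptions: the host names, the list of static extensions, and the `cdn_response` function are hypothetical, and I don't know how Yoast actually configured his server.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.example.com"  # hypothetical main site
CDN_HOST = "cdn.example.com"        # hypothetical CDN subdomain
# Assumed set of file types that the CDN subdomain is meant to serve.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".gif", ".ico")


def cdn_response(url):
    """Return (status, location) for a request URL.

    Static files on the CDN host are served as-is; any other path on the
    CDN host is answered with a 301 to the same path on the canonical host.
    """
    parts = urlsplit(url)
    if parts.hostname != CDN_HOST:
        return 200, None
    if parts.path.lower().endswith(STATIC_EXTENSIONS):
        return 200, None
    target = urlunsplit((parts.scheme, CANONICAL_HOST, parts.path, parts.query, ""))
    return 301, target


print(cdn_response("http://cdn.example.com/css/style.css"))
print(cdn_response("http://cdn.example.com/2010/05/some-post/"))
```

With a rule like this, the CDN host never returns a full HTML copy of the site, so there is nothing duplicated for a crawler to pick up.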

Conclusion

I’d like to know why Mashable opted for this choice and, if it is not an error, how they avoid the risk of duplicate content.

I’d like to know what Yoast thinks about that…

You’re right.. it’s strange.

With this command on Google:

site:domainname.ext (without ‘www’)

we can see all (or most) Mashable subdomains indexed by Google.

I’ve found ec.mashable.com too.

Great question. Mashable still has more visitors than Yoast. Does practice defeat theory? 🙂

Mashable is bigger than Yoast’s blog.. you know, as the saying goes, ‘content is king’, etcetera etcetera.

@Andrea: take a look at the robots.txt file; they tried to block bots on that subdomain.

http://cdn.mashable.com/robots.txt

I hope that this new robots.txt will solve their problem… :)

http://www.google.com/search?hl=en&q=site:cdn.mashable.com&aq=f&aqi=&aql=&oq=&gs_rfai=

but I don’t think that a robots.txt is the best solution or configuration.

Thanks for your comment, big SEO guru!!!
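For anyone who wants to see what such a file does, here is a small sketch using Python's standard `urllib.robotparser`. The rules below are an assumption of mine (a blanket disallow); the actual contents of the file at the URL above are not quoted in this thread, and the example URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumed rules: a blanket block for all bots. Not the real file's contents.
ASSUMED_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def can_crawl(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical URL on a CDN subdomain.
page = "http://cdn.example.com/some-post/"

print(can_crawl(ASSUMED_ROBOTS_TXT, "Googlebot", page))
print(can_crawl("", "Googlebot", page))
```

This only models whether a well-behaved bot is allowed to crawl a URL. It does not redirect visitors to the main site the way a 301 does.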